Sreeharsha Paruchuri

Hi! I’m Harsha, a graduate student at Carnegie Mellon University’s Robotics Institute, advised by Prof. Katerina Fragkiadaki. I am currently working on explicit 3D scene representations for diffusion- and flow-based Vision-Language-Action models for manipulation tasks.

Previously, at IIIT Hyderabad, I worked with Madhava Krishna on problems in depth estimation and structure from motion for autonomous driving, as well as visual-inertial SLAM for indoor robots. With Prof. Vinoo Alluri’s guidance, I analyzed how lyrical regularities in music listening history correlate with early indicators of mental disorders.

At CMU, I have specialized in generative AI and robot learning: I aligned LLM outputs to generate physically stable 3D structures and used online reinforcement learning to adapt Vision-Language-Action models to unseen tasks. For my capstone project, I built an augmented-reality and robot-assisted knee replacement system, using RGB-D data to achieve real-time, millimeter-accurate point cloud registration. To enhance the doctor-in-the-loop experience, I designed a pipeline that leverages 2D foundation model embeddings and latent clustering to autonomously position the robot for optimal surgical visibility.

Professionally, I have led research on robot navigation in unseen indoor environments, using reinforcement learning to combine audio signals with RGB-D data. More recently, I accelerated HD-mapping workflows for pavement markings, which are crucial for safe autonomous vehicle operation, by fusing dense, multi-view 2D-3D correspondences.

Outside of work, I might be reading with a cup of coffee, driving down a National Highway, or at a Coldplay concert 🌌🪐☄️⋆。゚

Research Interests: 3D Computer Vision, Multimodal Perception, Robot Learning, Human–Computer Interaction

Email / LinkedIn / Github / Google Scholar / Physical AI Resume / ML/CV Resume

Industry Experience

Mach9

May 2025 - Aug 2025

Focus: Developed a CUDA-accelerated 2D-3D feature-correspondence pipeline and fine-tuned Vision-Language Models to speed up quality assurance in outdoor surveying systems

• Developed and deployed Pavement Symbol Extraction functionality in the Digital Surveyor software via CUDA-accelerated coordinate-frame transformations and segmentation masks.

• Implemented unit and CI testing pipelines with GitHub Actions to validate CUDA kernels and vector-field clustering modules, ensuring production-grade reliability.

• Fine-tuned a Vision-Language Model (VLM) as a secondary-inference pipeline that classifies extracted open-set painted symbols according to user specifications.

• Conducted 70+ controlled ablation experiments with A/B testing on Hungarian assigner costs, loss weights, model queries, multi-scale deformable attention, and encoder-decoder expressivity, boosting the performance of the production model by 4%.

• Utilised methods from the object-detection literature to qualitatively capture the uncertainty of a DETR-based polyline detection model, expediting downstream Quality Assurance and Quality Control processes and saving company and customer resources.
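DETR-style detectors compute their loss only after each ground-truth element has been paired with a unique prediction. That pairing minimizes a weighted matching cost, and the "Hungarian assigner costs" above are the weights of that cost.

The following is a minimal sketch under stated assumptions. The cost is hypothetical: a class-probability term plus a mean L1 polyline-vertex term, with illustrative weights `w_cls` and `w_l1`. The function names are also hypothetical. Instead of running the Hungarian algorithm, the sketch finds the optimal assignment by brute force over permutations. This gives the same answer but is only practical for a handful of queries; production code would typically call `scipy.optimize.linear_sum_assignment`.

```python
import itertools
from typing import List, Sequence, Tuple

Polyline = List[Tuple[float, float]]


def match_cost(probs: Sequence[float], pred_pts: Polyline, gt_cls: int, gt_pts: Polyline,
               w_cls: float = 1.0, w_l1: float = 5.0) -> float:
    """Hypothetical pairwise cost: low when the prediction is confident in the
    ground-truth class and its vertices lie close to the ground-truth vertices."""
    l1 = sum(abs(px - gx) + abs(py - gy)
             for (px, py), (gx, gy) in zip(pred_pts, gt_pts)) / len(gt_pts)
    return -w_cls * probs[gt_cls] + w_l1 * l1


def assign(preds, gts, **weights) -> List[Tuple[int, int]]:
    """Optimal one-to-one (gt_index, pred_index) matching by exhaustive search.
    Assumes len(preds) >= len(gts), as with DETR's fixed set of queries."""
    cost = [[match_cost(p_probs, p_pts, g_cls, g_pts, **weights)
             for p_probs, p_pts in preds] for g_cls, g_pts in gts]
    # Each permutation assigns a distinct prediction to every ground-truth element.
    best = min(itertools.permutations(range(len(preds)), len(gts)),
               key=lambda perm: sum(cost[g][p] for g, p in enumerate(perm)))
    return list(enumerate(best))
```

Raising `w_l1` relative to `w_cls` makes the matcher favor geometric agreement over classification confidence. Ablations over assigner costs tune exactly this trade-off.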


Tata Consultancy Services Research

July 2022 - July 2024

Focus: Led research in cognitive robotics, emphasizing navigation built on audio-visual feature correspondence and reinforcement learning for active SLAM.

• Audio-Visual Navigation: Led development of an embodied AI agent with multimodal sensing, training an online RL policy with a novel class-agnostic reward that reduced path length by 21%

• Offline RL for Indoor Robot Navigation: Built a simulation pipeline to collect large-scale trajectory datasets and trained a Causal Decision Transformer with early multimodal fusion; integrated environment randomization, behavior-cloning baselines, and replay-buffer curation to improve policy robustness and sample efficiency

• CLIP-Enhanced Scene Graphs: Designed a contrastive-learning framework to compute visual-language embeddings, leveraging GNNs to model object-region relationships

• Open Vocabulary Manipulation (NeurIPS 2023): Developed an active SLAM exploration algorithm conditioned on a probabilistic semantic map, improving task success by 60%

• Volunteered for TCS's Project Synergy initiative, teaching written and spoken English to students at a Bangla-medium government school


Robotics Research Center (RRC, IIIT-H)

Jan 2020 - June 2022

Focus: Worked on dense 3D reconstruction and on SLAM techniques such as pose-graph optimization, for indoor robots and for outdoor autonomy on self-driving vehicles.

• Autonomous Sanitization Robot: Designed and implemented an end-to-end robotic system during COVID-19 to autonomously sanitize indoor spaces, integrating computer vision, Visual SLAM, and coverage-based navigation

• Sim-to-Real Deployment: Built Gazebo simulation environments for iterative testing, then transferred the stack to a hardware platform with onboard sensors and sanitization actuators; finished runner-up among 140 teams

• LiDAR SLAM: Evaluated LiDAR odometry and mapping approaches such as LOAM using CARLA simulation and outdoor driving data, analyzing localization accuracy and map consistency

• Depth Estimation: Implemented stereo and monocular depth estimation methods on driving datasets including KITTI and nuScenes, and developed a ROS package for multi-view bundle adjustment

I have also contributed to:

• Augmented difficult-to-obtain real-world LiDAR datasets using synthetic data from generative models and physics engines, improving 3D object detection networks for outdoor scenarios

• Scraped Reddit data to link music-sharing trends with mental health during COVID-19 using BERT embeddings and DBSCAN clustering; published in a medical journal

Selected Projects

Augmented-Reality and Robot Assisted Knee Surgery

Gathered and analyzed requirements from user studies, market competition, and sponsors to inform system development. Processed 3D and RGB information from the Apple Vision Pro to detect bone models in the environment via ICP registration.

• Project Leadership: Led a 5-person team as Project Manager, driving scheduling, sponsor communication, and system integration for an AR-assisted surgical robotics platform

• Accuracy-driven Perception: Achieved sub-4 mm drilling accuracy in total knee arthroplasty using a KUKA MED7 arm with multi-stage point cloud registration (SAM2 + ICP)

• AR Integration: Integrated the Apple Vision Pro for dynamic bone tracking and real-time surgeon-in-the-loop planning across long surgical horizons

• Motion Planning: Designed and deployed a ROS + MoveIt planning subsystem that adapts as surgical pins are drilled, enabling safe trajectory generation

• Hardware Development: Built a custom 3D-printed drill end-effector with embedded control electronics, activated during trajectory execution for autonomous drilling
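The registration pipeline above ends in ICP, which alternates two steps. First, each source point is matched to its nearest target point. Second, the rigid transform that best aligns those pairs is solved in closed form.

The following is a minimal sketch of point-to-point ICP under stated assumptions. It is simplified to 2D, uses brute-force nearest neighbours, and the function names are hypothetical. The project worked on 3D point clouds after SAM2-based segmentation, which is not reproduced here.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def transform(points: List[Point], theta: float, tx: float, ty: float) -> List[Point]:
    """Apply the 2D rigid transform p -> R(theta) p + t."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def nearest(p: Point, cloud: List[Point]) -> Point:
    """Brute-force nearest neighbour (a k-d tree would replace this at scale)."""
    return min(cloud, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)


def best_rigid_2d(src: List[Point], dst: List[Point]) -> Tuple[float, float, float]:
    """Closed-form least-squares rotation + translation mapping paired src onto dst."""
    n = len(src)
    sx, sy = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    dx, dy = sum(p[0] for p in dst) / n, sum(p[1] for p in dst) / n
    dot = cross = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        ax, ay, bx, by = ax - sx, ay - sy, bx - dx, by - dy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)  # angle that maximizes sum of b . R a
    c, s = math.cos(theta), math.sin(theta)
    # Translation moves the rotated source centroid onto the target centroid.
    return theta, dx - (c * sx - s * sy), dy - (s * sx + c * sy)


def icp_2d(src: List[Point], dst: List[Point], iters: int = 20, tol: float = 1e-10):
    """Returns the accumulated (theta, tx, ty) that aligns src onto dst."""
    theta_tot, tx_tot, ty_tot = 0.0, 0.0, 0.0
    cur = list(src)
    for _ in range(iters):
        matches = [nearest(p, dst) for p in cur]
        theta, tx, ty = best_rigid_2d(cur, matches)
        cur = transform(cur, theta, tx, ty)
        # Compose: R_step (R_tot p + t_tot) + t_step.
        c, s = math.cos(theta), math.sin(theta)
        tx_tot, ty_tot = c * tx_tot - s * ty_tot + tx, s * tx_tot + c * ty_tot + ty
        theta_tot += theta
        if abs(theta) + abs(tx) + abs(ty) < tol:
            break
    return theta_tot, tx_tot, ty_tot
```

ICP only converges to the right answer from a reasonable initial guess. Multi-stage pipelines therefore usually run a coarse alignment or segmentation step before ICP refines the pose.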


Reinforcement Learning with Robotic Foundation Models for Manipulation

Extended OpenVLA-OFT, a 7B parallel-decoding VLA, with online PPO fine-tuning via a dual-head architecture. Preserving a frozen L1 regression head ensures safe deterministic rollouts while a stochastic policy head trained with GRPO advantages learns from environment interaction. Improved task success from 80% → 98% on LIBERO-Spatial while preserving 100 Hz closed-loop inference.

• Dual-Head Architecture: A frozen L1 regression head (167M params) executes deterministic actions during rollouts, while a stochastic tokenized head maps VLA hidden states [B, 56, 256] to a categorical action distribution; PPO gradients flow through the VLA backbone to update 55.4M LoRA parameters without touching the frozen 7.6B base

• Two-Stage Training: A behavior-cloning warmup (lr 5×10⁻⁷, cross-entropy) distills the deterministic policy into the stochastic head until it reaches ~80% validation success; a PPO stage with GRPO advantages (clip ratios 0.2/0.28, γ=0.99, λ=0.95) then runs for 25k steps, pushing success to ~98%

• Results: Evaluated on LIBERO-Spatial (10 pick-and-place tasks, 50 rollouts each); the stochastic policy's entropy remained stable throughout PPO training, indicating that the policy did not collapse
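The PPO stage above combines a group-relative advantage with a clipped surrogate objective. The following is a minimal sketch, not the project's training code, and it rests on two assumptions:

- GRPO advantages are computed by normalizing each rollout's return against the mean and standard deviation of its group.
- The "0.2/0.28" clip ratios denote an asymmetric range [1 - 0.2, 1 + 0.28] on the probability ratio.

Function names are hypothetical.

```python
import math
from typing import List, Sequence


def grpo_advantages(group_returns: Sequence[float], eps: float = 1e-8) -> List[float]:
    """Normalize each rollout's return by the mean/std of its group (GRPO-style)."""
    n = len(group_returns)
    mean = sum(group_returns) / n
    std = math.sqrt(sum((r - mean) ** 2 for r in group_returns) / n)
    return [(r - mean) / (std + eps) for r in group_returns]


def clipped_surrogate(logp_new: Sequence[float], logp_old: Sequence[float],
                      advantages: Sequence[float],
                      clip_low: float = 0.2, clip_high: float = 0.28) -> float:
    """PPO clipped surrogate (to be maximized), averaged over actions.
    The probability ratio is clipped to [1 - clip_low, 1 + clip_high]."""
    total = 0.0
    for lp_new, lp_old, adv in zip(logp_new, logp_old, advantages):
        ratio = math.exp(lp_new - lp_old)
        clipped = min(max(ratio, 1.0 - clip_low), 1.0 + clip_high)
        # Pessimistic bound: keep the smaller of the unclipped and clipped terms.
        total += min(ratio * adv, clipped * adv)
    return total / len(advantages)
```

A wider upper clip lets the policy raise the probability of well-rewarded actions a bit faster than it lowers others. This is one common way to keep policy entropy from collapsing during fine-tuning.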


BrickDPO: Post-Training LLMs for Physically Stable 3D Generation

Fine-tuned LLaMA-3.2-1B-Instruct with DPO + QLoRA on a curated stability preference dataset, in which winning and losing brick sequences were ranked by a physics solver without human supervision. Baking structural stability directly into the LLM reduced mean regenerations by 57% while preserving brick stability scores.

• Preference Dataset: Ran BrickGPT inference with physics-aware rollback to collect 3,366 training triples (context, winning sequence, losing sequence) across the car class; filtered by sequence length to fit the compute budget

• DPO Loss: Optimised the Bradley-Terry objective over stable vs. unstable brick continuations; partial stable prefixes were shifted into the prompt to avoid penalising valid early placements

• Ablation: Including partial structures in the prompt reduced mean regenerations from 5.11 → 3.92, vs. 9.24 for the BrickGPT baseline, with stability scores unchanged
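For each preference triple, the DPO objective above is a logistic (Bradley-Terry) loss on the margin between two log-ratios: the policy-vs-reference log-ratio of the winning sequence minus that of the losing sequence:

L = -log σ(β [(log π_θ(y_w|x) - log π_ref(y_w|x)) - (log π_θ(y_l|x) - log π_ref(y_l|x))])

The following is a minimal sketch under stated assumptions. Sequence log-probabilities are taken to be already summed over the tokens of each brick sequence, and the temperature `beta = 0.1` is illustrative. Function names are hypothetical.

```python
import math
from typing import Iterable, Tuple


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def dpo_loss(logp_win: float, logp_lose: float,
             ref_logp_win: float, ref_logp_lose: float, beta: float = 0.1) -> float:
    """-log sigmoid(beta * margin): the margin measures how much more the policy
    raised the winning sequence than the losing one, relative to the frozen reference."""
    margin = (logp_win - ref_logp_win) - (logp_lose - ref_logp_lose)
    return softplus(-beta * margin)  # -log(sigmoid(z)) == softplus(-z)


def batch_dpo_loss(batch: Iterable[Tuple[float, float, float, float]], beta: float = 0.1) -> float:
    """Mean DPO loss over (logp_win, logp_lose, ref_logp_win, ref_logp_lose) tuples."""
    losses = [dpo_loss(*t, beta=beta) for t in batch]
    return sum(losses) / len(losses)
```

When the policy equals the reference, the margin is zero and the loss is log 2. The loss drops below log 2 only as the policy shifts probability toward the stable continuation.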


CMU VLA Challenge

Built a Vision-Language Navigation (VLN) system that answered natural language queries by combining Gemini 2.5 Pro embodied reasoning with a custom ROS state machine. The system produced numerical answers, object references, or waypoint plans under a strict 10-minute limit.

• Natural Language Understanding: Used Gemini 2.5 Pro to classify queries and reason over spatial relations such as “closest to the window”

• State Machine: Designed a ROS state machine to coordinate exploration, mapping, and answering with dynamic transitions

• Deployment Ready: Deployed the system on a real robot for the challenge using clean Docker containerization


ReMov3r: Real-Time Monocular Video to 3D Reconstruction

Engineered a transformer-based architecture leveraging cross-attentional feature fusion with adaptive keyframe selection to model long-range spatio-temporal dependencies via causal self-attention over sliding windows. Enforced multi-view geometric consistency via confidence-guided depth refinement, achieving a 53% reduction in absolute trajectory error over the SOTA baseline on 7-Scenes.

• Architecture: Asymmetric cross-attention decoders fuse Depth Anything V2 geometric priors with DINOv2 visual tokens via RoPE spatial encoding; DPT heads produce dense pointmap and depth outputs

• Losses: Jointly optimised a confidence-aligned pointmap regression loss (ConfAlignPointMapRegLoss), a confidence-aligned depth regression loss (ConfAlignDepthRegLoss), and a quaternion + translation pose regression loss

• Memory: Dual local/global causal self-attention streams with cosine-similarity keyframe selection decouple frame-level coherence from scene-level consistency
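One simple way to realize the cosine-similarity keyframe selection above is a greedy rule: start a new keyframe whenever the current frame's embedding has drifted too far from the last keyframe's. This is a hypothetical sketch. The threshold value, the embedding source (for example, pooled per-frame tokens), and the greedy rule itself are assumptions, not details of ReMov3r.

```python
import math
from typing import List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def select_keyframes(embeddings: List[Sequence[float]], threshold: float = 0.9) -> List[int]:
    """Greedy selection over a non-empty list of per-frame embeddings: frame 0 is
    always a keyframe; later frames become keyframes when their similarity to the
    most recent keyframe falls below `threshold` (a hypothetical value)."""
    keyframes = [0]
    for i in range(1, len(embeddings)):
        if cosine_similarity(embeddings[i], embeddings[keyframes[-1]]) < threshold:
            keyframes.append(i)
    return keyframes
```

A lower threshold yields fewer, more distinct keyframes, which keeps a global memory small. A higher threshold tracks viewpoint changes more closely.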


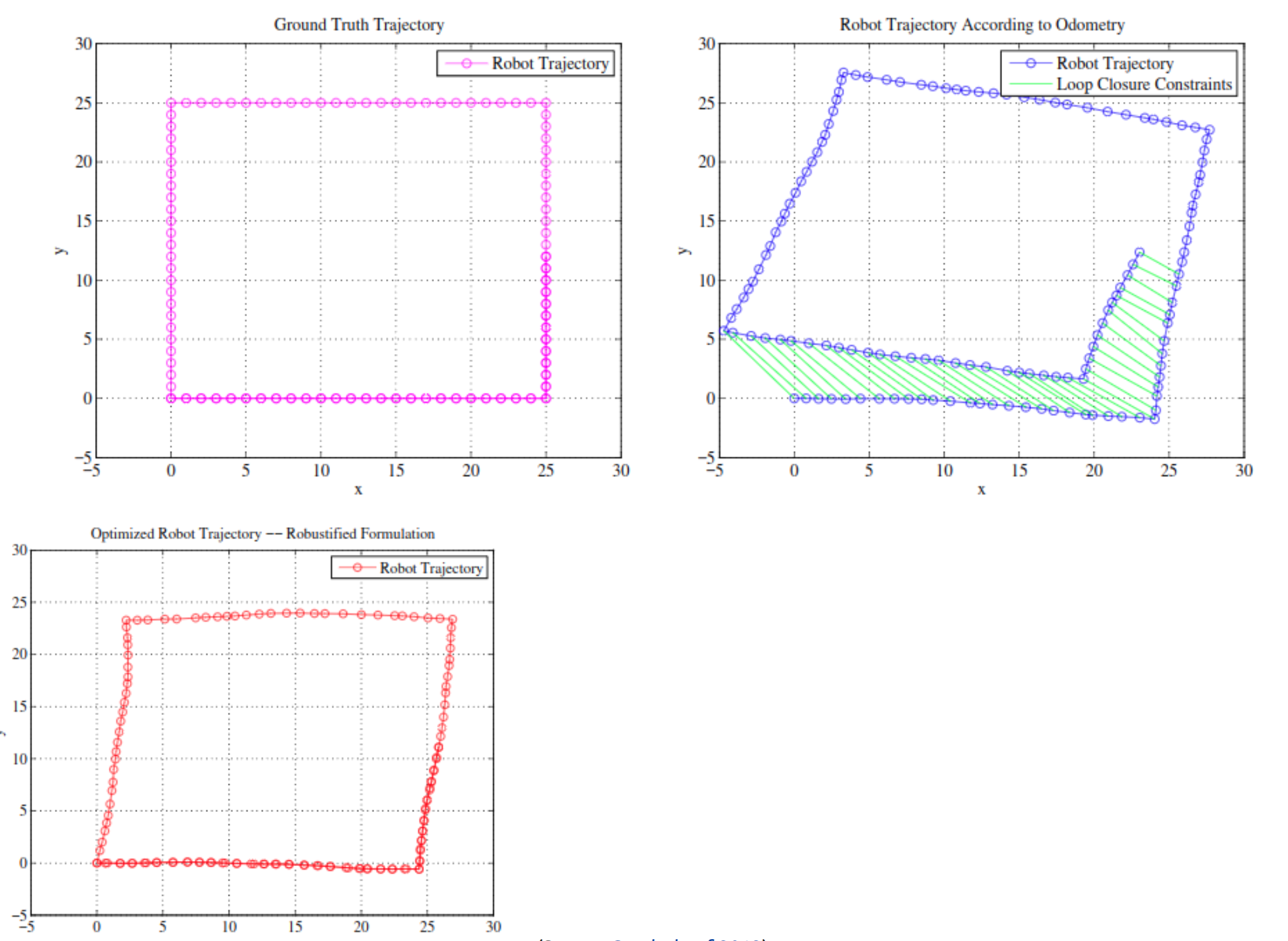

Pose Graph Optimization for 2D SLAM

Implemented a 2D SLAM backend that refines noisy odometry and loop-closure constraints into a globally consistent trajectory. Used JAX to compute residuals and Jacobians, applied nonlinear least-squares optimization, and validated improvements with RPY and APE error metrics. Explored the role of confidence weighting in the information matrix and compared against g2o optimization with robust kernels.

• Iterative Optimization: Built a custom nonlinear solver in JAX with residual and Jacobian computation for pose updates

• Loop Closures: Studied the effect of varying odometry vs. loop-closure confidence weights on trajectory quality, visualized in g2o_viewer

• Error Evaluation: Quantified improvements via RPY drift and Absolute Pose Error reduction relative to the initial odometry

• Literature Review: Analyzed the “Past, Present & Future of SLAM” survey, contextualizing open problems in robustness and scalability alongside deep learning-based approaches
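The effect of confidence weights on a pose graph shows up clearly in a simplified setting. The project optimized full poses with rotations, which makes the problem nonlinear. This sketch keeps only 2D positions, so each constraint p_j - p_i ≈ z_ij is linear and one weighted normal-equation solve gives the optimum. The scalar `weight` stands in for the information matrix.

This is a hypothetical illustration, not the project's JAX solver. It assumes pose 0 is fixed at the origin to remove the gauge freedom.

```python
from typing import List, Sequence, Tuple

# (i, j, dx, dy, weight): measurement that p_j - p_i is approximately (dx, dy)
Constraint = Tuple[int, int, float, float, float]


def solve_linear(a: List[List[float]], b: Sequence[float]) -> List[float]:
    """Gaussian elimination with partial pivoting (small dense systems only)."""
    n = len(b)
    m = [row[:] + [b[k]] for k, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def optimize_translations(num_poses: int, constraints: List[Constraint]) -> List[Tuple[float, float]]:
    """Weighted least squares over 2D positions, with pose 0 fixed at the origin."""
    n = num_poses - 1  # unknowns are poses 1..num_poses-1
    H = [[0.0] * n for _ in range(n)]
    bx, by = [0.0] * n, [0.0] * n
    for i, j, dx, dy, w in constraints:
        # Residual r = p_j - p_i - z has Jacobian -1 w.r.t. p_i and +1 w.r.t. p_j;
        # index -1 is the fixed anchor, which contributes nothing.
        for a, sign in ((i - 1, -1.0), (j - 1, 1.0)):
            if a < 0:
                continue
            bx[a] += sign * w * dx
            by[a] += sign * w * dy
            for col, sign2 in ((i - 1, -1.0), (j - 1, 1.0)):
                if col >= 0:
                    H[a][col] += sign * sign2 * w
    xs, ys = solve_linear(H, bx), solve_linear(H, by)
    return [(0.0, 0.0)] + list(zip(xs, ys))
```

In the usage below, the third odometry edge over-reports motion by 0.2. Dead reckoning alone would therefore leave pose 3 at x = -0.2. A heavily weighted loop closure pulls pose 3 back near x = 0 and spreads the inconsistency across the low-weight odometry edges instead.

```python
odometry = [(0, 1, 1.0, 0.0, 1.0), (1, 2, 0.0, 1.0, 1.0), (2, 3, -1.2, 0.0, 1.0)]
loop_closure = [(3, 0, 0.0, -1.0, 100.0)]
poses = optimize_translations(4, odometry + loop_closure)
```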

Education

Carnegie Mellon University, School of Computer Science

Aug 2024 - May 2026

CGPA: 4.11/4.0

Teaching: Introduction to Deep Learning, Robot Autonomy and Manipulation

Coursework: Learning for 3D Vision, Generative AI, Deep RL, Diffusion and Flow Matching

• Learning for 3D vision: 3D generation, volume rendering + NeRFs, Gaussian splatting + diffusion-guided optimization, Classifier-Free Guidance, PointNet classification and segmentation

• Generative AI: grouped-query attention + RoPE in GPT-2, diffusion models and VAEs, parameter-efficient fine-tuning + DPO, In-Context Learning, Mixture of Experts, paper review

• Deep reinforcement learning: policy gradients, Q-learning, Performance Difference Lemma, actor-critic methods, Proximal Policy Optimization, evolutionary methods, DAgger, Imitation Learning

• Robot autonomy, mobility and control: grasping, Kalman filtering, control for drones and humanoids

• Advanced computer vision: homography, Lucas-Kanade tracking, photometric stereo

• Systems engineering: functional architecture, unit tests, project management


International Institute of Information Technology, Hyderabad

Aug 2018 - July 2022

Major CGPA: 9.02/10

Awards: Dean's Merit List, Undergraduate Research Award

Coursework: Statistics in AI, Topics in Applied Optimization, Mobile Robotics

• Statistical methods in AI: PCA, SVMs, Bayesian inference, logistic regression, image classification

• Applied optimization: linear programming, convex optimization, singular value decomposition

• Computer vision: camera calibration, SIFT, GrabCut, Mask R-CNN, bag of words, Viola-Jones

• Mobile robotics: pose-graph optimization, epipolar geometry, RRT, non-linear optimization

• Digital image processing: edge detection, morphological operations, alpha blending

• Data structures and algorithms: graph algorithms, dynamic programming, complexity analysis

• Operating systems: process management, memory allocation, file systems, concurrency

• Linear algebra: matrix operations, eigenvalues, vector spaces, linear transformations

• Compilers: lexical analysis, parsing, code generation and grammar

• Game theory: Nash equilibrium, mechanism design

Teaching Experience

Carnegie Mellon University

Course Website

Description: A comprehensive graduate-level course covering neural networks, CNNs, RNNs, transformers, and modern deep learning architectures.

• Created educational content, including slides and tutorials on NumPy fundamentals and loss functions (Focal Loss, Chamfer Loss, RLHF)

• Designed a Colab notebook and slides to lead a lab on backpropagation and training Convolutional Neural Networks

• Collaborated with the instructional team to revise and update homework assignments on RNNs, GRUs, Transformers, Language Generation, Diffusion models, and PEFT

• Led over 40 hours of office hours, labs, and hackathon events, providing hands-on instruction and problem-solving support to undergraduate and graduate students
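As an example of the loss functions covered in those tutorials, here is a minimal sketch of the symmetric Chamfer distance between two point sets. It uses the convention of mean squared nearest-neighbour distance in each direction; other conventions sum instead of averaging, or use unsquared distances. The brute-force search costs O(|P|·|Q|) and is meant only for illustration. Function names are hypothetical.

```python
from typing import List, Tuple

Point3 = Tuple[float, float, float]


def sq_dist(a: Point3, b: Point3) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


def chamfer_distance(p: List[Point3], q: List[Point3]) -> float:
    """Mean squared distance from each point to its nearest neighbour in the
    other set, summed over both directions (P -> Q and Q -> P)."""
    p_to_q = sum(min(sq_dist(a, b) for b in q) for a in p) / len(p)
    q_to_p = sum(min(sq_dist(b, a) for a in p) for b in q) / len(q)
    return p_to_q + q_to_p
```

Because neither set needs point-to-point correspondences, Chamfer distance is a common training loss for point-cloud generation and completion.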


International Institute of Information Technology, Hyderabad

Courses: Mobile Robotics, Music Mind and Technology, Introduction to Coding Theory

• CS7.503.M21: Mobile Robotics: The most renowned course of IIIT-H. Provides students with a comprehensive toolkit for research at the intersection of Robotics and Computer Vision, covering SLAM, Computer Vision and Planning algorithms

• CS9.434.S22: Music, Mind and Technology: An interdisciplinary course using algorithms and mathematics to explore how music is perceived by individuals and groups. Served as head TA, designing evaluations for over 60 graduate and undergraduate students

• EC5.205.S21: Introduction to Coding Theory: A fascinating subject building on Shannon's Theory of Communication, exploring the mathematical foundations that underpin everyday communication systems

Publications

How Much do Lyrics Matter? Analysing Lyrical Simplicity Preferences for Individuals At Risk of Depression

International Speech Communication Association (INTERSPEECH) 2021 / arXiv

Analyzed how lyrical regularities in music listening histories correlate with early indicators of mental health disorders through computational analysis of audio-visual features and linguistic patterns.


Analysing music discourse & depression on Reddit

arXiv / code

Applied BERT-based sentiment analysis and k-means clustering to uncover nuanced links between language and acoustic music features in data scraped from mental-health-related subreddits during COVID-19. This research contributed to understanding the relationship between music and mental health through computational methods.

• BERT Sentiment Analysis: Fine-tuned transformer models on mental health discourse to extract emotional patterns from 50k+ Reddit posts, achieving 87% accuracy in mood classification

• Music Information Retrieval: Developed an acoustic feature extraction pipeline using librosa and essentia to correlate musical elements (tempo, key, valence) with psychological states

• COVID-19 Impact Study: Applied k-means clustering and statistical analysis to identify significant behavioral shifts in music consumption patterns during the pandemic; published findings at INTERSPEECH 2021

Technical Skills

Languages: Python, C++, MATLAB, CUDA, Java, Go, Swift

ML/AI: PyTorch, TensorFlow, Scikit-learn, PyTorch3D

Tools: ROS2, Unity 3D, OpenCV, Xcode, Django, Git

Miscellaneous: Rust, JAX, Docker, Kubernetes, AWS, GCP


Original template taken from here!